In my previous post I discussed sending messages to a Service Bus queue. The messages sent to the queue are then picked up by the SB-Messaging Adapter for BizTalk Server 2010 R2 CTP, which runs in a VM on Windows Azure. BizTalk then processes these messages by inserting them into a table.

Service Bus Messaging: Queues, SB-Messaging Adapter

I used one client to send multiple messages to the queue. I measured the time it took to send the messages to the queue, not the time it took BizTalk to insert each message into a SQL Server 2008 R2 table. However, the latency between the queue and SQL Server 2008 R2 was relatively low: as soon as all the messages were sent, the same number of records was present in the table. Overall, performance is not bad for a few thousand messages; latency and throughput are good.

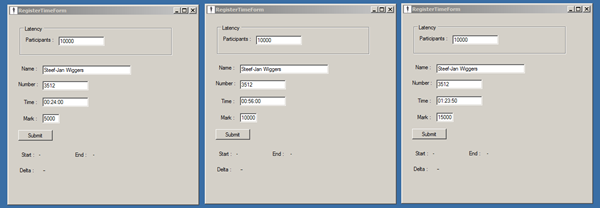

In this post I take a different approach. I will simulate a run with 10000 participants. The run is 15K, with passing time marks at 5K and 10K. At 15K the finishing time is registered. I will use multiple clients to send messages to the Service Bus queue: one client sending 10000 messages for the 5K passing mark, one sending 10000 messages for the 10K passing mark, and one sending 10000 messages for the 15K finishing times.
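To make the workload concrete, here is a minimal Python sketch of how the 30000 messages could be generated and divided over a number of clients. It is not the code I used for the test; the `PassingTime` type, the field names, and the made-up timing formula are all assumptions for illustration, and no Service Bus calls are involved.

```python
from dataclasses import dataclass
from typing import Iterator, List


@dataclass(frozen=True)
class PassingTime:
    """Hypothetical message shape: one timing record per participant per mark."""
    bib_number: int
    mark_km: int
    seconds: int


def messages_for_mark(mark_km: int, participants: int = 10_000) -> Iterator[PassingTime]:
    """Yield one message per participant for the given mark (5, 10 or 15)."""
    for bib in range(1, participants + 1):
        # Made-up, deterministic time: 5 min/km plus a small per-runner offset.
        yield PassingTime(bib, mark_km, mark_km * 300 + bib % 600)


def split_across_clients(messages: List[PassingTime], clients: int) -> List[List[PassingTime]]:
    """Divide the messages round-robin so every client gets a near-equal share."""
    return [messages[i::clients] for i in range(clients)]


all_messages = [m for mark in (5, 10, 15) for m in messages_for_mark(mark)]
for clients in (3, 6, 9, 12):
    shares = split_across_clients(all_messages, clients)
    print(clients, "clients ->", [len(s) for s in shares][:3], "...")
```

Each share would then be handed to one sending client; how the client actually sends to the queue is left out on purpose.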

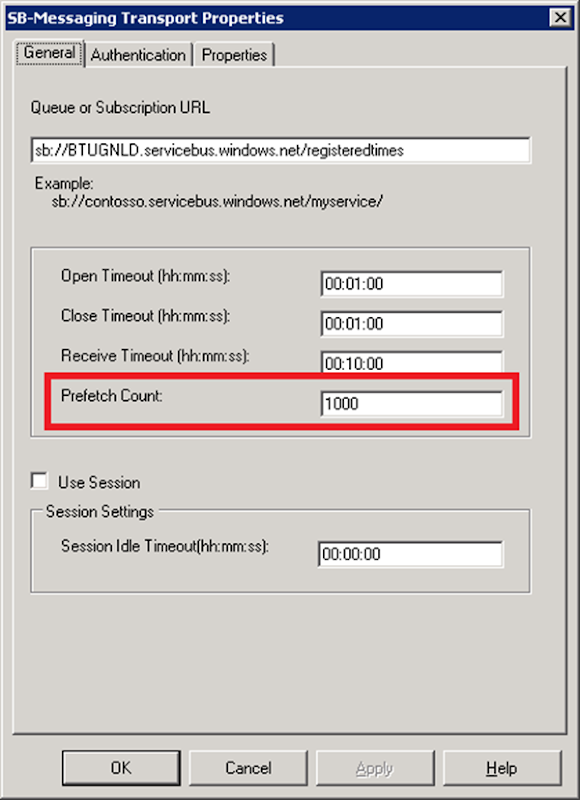

I have changed some settings, such as the batch size for the WCF-SQL Adapter, which I set to 1000. For SB-Messaging I changed the Prefetch Count. I also changed the polling interval of the processing host to 50 ms. These changes are experimental, intended to improve performance (latency and throughput).


The Prefetch Count specifies the number of messages that are received simultaneously from the Service Bus queue or topic. I initially set it to 1000 to observe the effect on throughput in my BizTalk environment. For more on prefetching, see Best Practices for Performance Improvements Using Service Bus Brokered Messaging.
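As a rough back-of-the-envelope illustration of why a larger Prefetch Count can help, the sketch below counts how many fetches are needed to drain the queue in an idealized model where every fetch returns a full batch. This is a simplification and an assumption on my part, not a description of how the adapter behaves internally; `receive_round_trips` is a hypothetical helper.

```python
import math


def receive_round_trips(total_messages: int, prefetch_count: int) -> int:
    """Idealized number of fetches needed when each fetch returns a full batch."""
    if prefetch_count < 1:
        raise ValueError("this toy model needs a prefetch count of at least 1")
    return math.ceil(total_messages / prefetch_count)


for prefetch in (1, 100, 1000):
    print(prefetch, "->", receive_round_trips(30_000, prefetch), "fetches")
```

Fewer fetches only help if the receiving side can keep up with the larger batches, which is exactly where BizTalk throttling comes into play.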

| Number of clients | Messages per client | Total messages | Duration (latency) in seconds | Msg/sec |
|---|---|---|---|---|
| 3 | 10000 | 30000 | 2025 | 14.81 |
| 6 | 5000 | 30000 | 1430 | 20.98 |
| 9 | 3333 | 30000 | 968 | 30.99 |
| 12 | 2500 | 30000 | 556 | 53.96 |
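The Msg/Sec column in the table above is simply the total number of messages divided by the duration in seconds. A small Python sketch of that arithmetic, using the measured values from the table (the `throughput` helper name is mine):

```python
# (clients, total messages, duration in seconds), copied from the table.
runs = [
    (3, 30_000, 2025),
    (6, 30_000, 1430),
    (9, 30_000, 968),
    (12, 30_000, 556),
]


def throughput(total_messages: int, duration_s: float) -> float:
    """Messages sent per second over the whole run."""
    return total_messages / duration_s


for clients, total, duration in runs:
    print(f"{clients:>2} clients: {throughput(total, duration):.2f} msg/sec")
```

Note that this is the send rate towards the queue, not the rate at which BizTalk processes the messages.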

With nine clients, sending the messages to the queue took less than 1000 seconds; however, BizTalk started throttling and finished processing 180 seconds later. Still, processing the 30000 messages took around 21 minutes. I changed the polling interval of the processing host back to its default of 500 ms. In a subsequent test BizTalk throttled again after a few minutes.

BizTalk host BizTalkServerApplication throttled because InflightMessageCount exceeded the configured throttling limit.

With more clients, the time needed to send the messages to the queue decreases. However, throughput at the BizTalk end drops, and the latency of the complete process suffers as well. Performance decreases because BizTalk is not tuned for that kind of load. Tuning in the dashboard will therefore be required to optimize BizTalk, if possible. It may be better, however, to scale vertically by assigning the VM more cores and memory (i.e. a large or extra-large instance), or to scale horizontally by adding more BizTalk instances. Scaling also means incurring more cost.

See Pricing Details Windows Azure.

With more clients and more queues you will be able to send 100 or more messages per second to the Service Bus queues (see the Scenarios section of Best Practices for Performance Improvements Using Service Bus Brokered Messaging). This can be done easily. However, your backend systems (i.e. BizTalk in this scenario) must be able to read and process the messages when performance (high throughput) is a requirement. Scalability is therefore an important quality attribute in this kind of scenario. In upcoming posts I will delve further into this scenario and experiment with Service Bus queues and topics.
